Posted by Jason Jabbour, Kai Kleinbard and Vijay Janapa Reddi (Harvard University)

Everyone wants to do the modeling work, but no one wants to do the engineering.

If ML developers are like astronauts exploring new frontiers, ML systems engineers are the rocket scientists designing and building the engines that take them there.

Introduction

The saying "Everyone wants to do the modeling work, but no one wants to do the engineering" captures a stark reality in the machine learning (ML) world: the allure of building sophisticated models often overshadows the critical task of engineering them into robust, scalable, and efficient systems.

The reality is that ML and systems are inextricably linked. Models, no matter how innovative, are computationally demanding and require substantial resources. With the rise of generative AI and increasingly complex models, understanding how ML infrastructure scales becomes even more critical. Ignoring the system's limitations during model development is a recipe for disaster.

Unfortunately, educational resources on the systems side of machine learning are lacking. Textbooks and materials on deep learning theory and concepts are plentiful, but far fewer cover the infrastructure and systems side. Critical questions, such as how to optimize models for specific hardware, deploy them at scale, and ensure system efficiency and reliability, are still not adequately understood by ML practitioners. This lack of understanding is not due to disinterest but rather to a gap in available knowledge.

One significant resource addressing this gap is MLSysBook.ai. This blog post explores key ML systems engineering concepts from MLSysBook.ai and maps them to the TensorFlow ecosystem to provide practical insights for building efficient ML systems.

The Connection Between Machine Learning and Systems

Many think machine learning is solely about extracting patterns and insights from data. While this is fundamental, it’s only part of the story. Training and deploying deep neural network models often requires massive datasets and vast computational resources, from powerful GPUs and TPUs to distributed computing clusters.

Consider the recent wave of large language models (LLMs) that have pushed the boundaries of natural language processing. These models highlight the immense computational challenges in training and deploying large-scale machine learning models. Without carefully considering the underlying system, training times can stretch from days to weeks, inference can become sluggish, and deployment costs can skyrocket.

Building a successful machine-learning solution involves the entire system, not just the model. This is where ML systems engineering takes the reins, allowing you to optimize model architecture, hardware selection, and deployment strategies, ensuring that your models are not only powerful in theory but also efficient and scalable.

To draw an analogy, if developing algorithms is like being an astronaut exploring the vast unknown of space, then ML systems engineering is similar to the work of rocket scientists building the engines that make those journeys possible. Without the precise engineering of rocket scientists, even the most adventurous astronauts would remain earthbound.

Bridging the Gap: MLSysBook.ai and System-Level Thinking

One important new resource for insights into ML systems engineering is MLSysBook.ai, an open-source "textbook" developed initially as part of Harvard University's CS249r Tiny Machine Learning course and HarvardX's TinyML online series. This project, which has expanded into an open, collaborative initiative, dives deep into the end-to-end ML lifecycle.

It highlights that the principles governing ML systems, whether designed for tiny embedded devices or large data centers, are fundamentally similar. For instance, tiny machines might use INT8 for numeric operations to save resources, while larger systems often use FP16 to retain more precision. In both cases the fundamental concept is the same: quantization, which trades numeric precision for efficiency.
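To make the INT8 versus FP16 trade-off concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not how MLSysBook.ai or any particular framework implements quantization: the weights are made up, the INT8 scheme is a simple symmetric per-tensor mapping (one of several possible schemes), and the helper names are hypothetical.

```python
import struct


def quantize_int8(values):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the INT8 range used here.
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize_int8(q, scale):
    """Recover approximate floats from the quantized integers."""
    return [x * scale for x in q]


def to_fp16(values):
    """Round each value to IEEE 754 half precision and back (struct format 'e')."""
    return [struct.unpack("<e", struct.pack("<e", v))[0] for v in values]


def max_abs_error(original, approx):
    """Largest absolute difference between the original and approximated values."""
    return max(abs(a - b) for a, b in zip(original, approx))


# Made-up example weights, chosen only for illustration.
weights = [0.8123, -0.4071, 0.0032, 0.2549, -1.0]

q, scale = quantize_int8(weights)
int8_error = max_abs_error(weights, dequantize_int8(q, scale))
fp16_error = max_abs_error(weights, to_fp16(weights))

print("INT8 codes:", q)
print("INT8 max abs error:", int8_error)
print("FP16 max abs error:", fp16_error)
```

For these values, the INT8 version stores each weight in one byte at the cost of a larger rounding error, while FP16 uses two bytes and stays closer to the original. The same precision-versus-resources reasoning applies whether the target is a microcontroller or a data center accelerator.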

Key concepts covered in this resource include:

- Data Engineering: Setting the foundation by efficiently collecting, preprocessing, and managing data to prepare it for the machine learning pipeline.

- Model Development: Crafting and refining machine learning models to meet specific tasks and performance goals.

- Optimization: Fine-tuning model performance and efficiency, ensuring effective use of hardware and resources within the system.

- Deployment: Transitioning models from development to real-world production environments while scaling and adapting them to existing infrastructure.

- Monitoring and Maintenance: Continuously tracking system health and performance to maintain reliability, address issues, and adapt to evolving data and requirements.

In an efficient ML system, data engineering lays the groundwork by preparing and organizing raw data, which is essential for any machine learning process. This ensures data can be transformed into actionable insights during model development, where machine learning models are created and refined for specific tasks. Following development, optimization becomes critical for enhancing model performance and efficiency, ensuring that models are tuned to run effectively on the designated hardware and within the system's constraints.

The seamless integration of these steps then extends into the deployment phase, where models are brought into real-world production environments. Here, they must be scaled and adapted to function effectively within existing infrastructure, highlighting the importance of robust ML systems engineering. However, the lifecycle of an ML system continues after deployment; continuous monitoring and maintenance are vital. This ongoing process ensures that ML systems remain healthy and reliable and continue to perform well over time, adapting to new data and requirements as they arise.

|

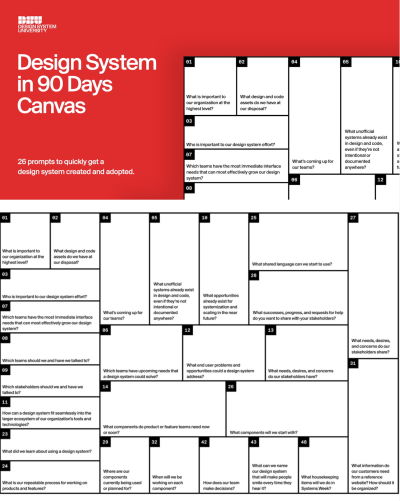

| A mapping of MLSysBook.AI's core ML systems engineering concepts to the TensorFlow ecosystem, illustrating how specific TensorFlow tools support each stage of the machine learning lifecycle, ultimately contributing to the creation of efficient ML systems. |

SocratiQ: An Interactive AI-Powered Generative Learning Assistant

One of the exciting innovations we’ve integrated into MLSysBook.ai is SocratiQ, an AI-powered learning assistant designed to foster a deeper and more engaging connection with content focused on machine learning systems. By leveraging a Large Language Model (LLM), SocratiQ turns learning into a dynamic, interactive experience in which students and practitioners actively engage with and co-create their educational journey.

With SocratiQ, readers transition from passive content consumption to an active, personalized learning experience. Here’s how SocratiQ makes this possible:

- Interactive Quizzes: SocratiQ enhances the learning process by automatically generating quizzes based on the reading content. This feature encourages active reflection and reinforces understanding without disrupting the learning flow. Learners can test their comprehension of complex ML systems concepts.

- Adaptive, In-Content Learning: SocratiQ offers real-time conversations with the LLM without pulling learners away from the content they're engaging with. Acting as a personalized Teaching Assistant (TA), it provides tailored explanations.

- Progress Assessment and Gamification: Learners’ progress is tracked and stored locally in their browser, providing a personalized path to developing skills without privacy concerns. This allows for evolving engagement as the learner progresses through the material.

SocratiQ strives to be a supportive guide that respects the primacy of the content itself. It subtly integrates into the learning flow, stepping in when needed to provide guidance, quizzes, or explanations—then stepping back to let the reader continue undistracted. This design ensures that SocratiQ works harmoniously within the natural reading experience, offering support and personalization while keeping the learner immersed in the content.

We plan to integrate capabilities such as research lookups and case studies. The aim is to create a unique learning environment where readers can study and actively engage with the material. This blend of content and AI-driven assistance transforms MLSysBook.ai into a living educational resource that grows alongside the learner's understanding.

Mapping MLSysBook.ai's Concepts to the TensorFlow Ecosystem

MLSysBook.ai focuses on the core concepts in ML systems engineering while providing strategic tie-ins to the TensorFlow ecosystem. The TensorFlow ecosystem offers a rich environment for realizing many of the principles discussed in the book, with each tool supporting a specific stage of the machine learning process:

- TensorFlow Data (Data Engineering): Supports efficient data preprocessing and input pipelines.

- TensorFlow Core (Model Development): Central to model creation and training.

- TensorFlow Lite (Optimization): Enables model optimization for various deployment scenarios, especially critical for edge devices.

- TensorFlow Serving (Deployment): Facilitates smooth model deployment in production environments.

- TensorFlow Extended (Monitoring and Maintenance): Offers comprehensive tools for ongoing system health and performance.

Note that MLSysBook.AI does not explicitly teach or focus on TensorFlow-specific concepts or implementations. The book's primary goal is to explore fundamental ML system engineering principles. The connections drawn in this blog post to the TensorFlow ecosystem are simply intended to illustrate how these core concepts align with tools and practices used by industry practitioners, providing a bridge between theoretical understanding and real-world application.

Support ML Systems Education: Every Star Counts 🌟

If you find this blog post valuable and want to improve ML systems engineering education, please consider giving the MLSysBook.ai GitHub repository a star ⭐.

Thanks to our sponsors, each ⭐ added to the MLSysBook.ai GitHub repository translates to donations supporting students and minorities globally by funding their research scholarships, empowering them to drive innovation in machine learning systems research worldwide.

Every star counts—help us reach the generous funding cap!

Conclusion

The gap between ML modeling and systems engineering is closing, and understanding both aspects is important for creating impactful AI solutions. By embracing ML systems engineering principles and leveraging powerful tools like those in the TensorFlow ecosystem, we can go beyond building models to creating complete, optimized, and scalable ML systems.

As AI continues to evolve, the demand for professionals who can bridge the gap between ML algorithms and systems implementation will only grow. Whether you're a seasoned practitioner or just starting your ML journey, investing time in understanding ML systems engineering will undoubtedly pay dividends in your career and the impact of your work. If you’d like to learn more, listen to our MLSysBook.AI podcast, generated by Google’s NotebookLM.

Remember, even the most brilliant astronauts need skilled engineers to build their rockets!

Acknowledgments

We thank Josh Gordon for his suggestion to write this blog post and for encouraging and sharing ideas on how the book could be a useful resource for the TensorFlow community.